Distributed Systems Assignment 4 Community Test Suite

Description

This is a test suite designed to test various cases in assignment 4. It is designed to be easy both to run and to extend with additional tests. Community contributions are encouraged.

How to use

Downloading

To use this program, it is recommended to clone the project or add it using the uv package manager. To clone it, run the following in the root directory of your project (the one with the Dockerfile):

```shell
git clone git@git.ucsc.edu:awaghili/cse138-assignment-4-test-suite.git
```

UV Package Manager Users

Alternatively, if you use the uv package manager, you can use uvx to run the tests without needing to manually download or install anything. uv is generally fast enough that re-downloading the package and its dependencies from git every time you run the command is not a problem.

See the uv documentation for installation instructions.

```shell
uvx --force-reinstall --from git+https://git.ucsc.edu/awaghili/cse138-assignment-4-test-suite#subdirectory=pkg cse138-asgn4-tests

# You can also pass arguments as normal:
uvx --force-reinstall --from git+https://git.ucsc.edu/awaghili/cse138-assignment-4-test-suite#subdirectory=pkg cse138-asgn4-tests --no-build

# Or, when the repository is cloned locally:
uvx --force-reinstall --from /path/to/repo/cse138-assignment-4-test-suite/pkg cse138-asgn4-tests
```

Running Tests

Running all tests is simple. Just run (as in assignment 2):

```shell
python3 -m cse138-assignment-4-test-suite
```

The tests are segmented into categories:

- basic

- availability

- eventual consistency

- causal consistency

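To run a single category of tests, pass the category name (e.g. availability) as the filter argument described under Command Line Arguments.

To run an individual test, pass the test's name (e.g. availability_advanced_2) as the filter. Because the filter matches substrings, this also selects any test whose name contains it, such as availability_advanced_20.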

Debugging Tests

Each test run is saved in a ./test_results/YY_MM_DD_HH:MM:SS directory, which has the following structure:

- summary.txt

- basic/

- availability/

- eventual_consistency/

- causal_consistency/

The summary contains the overall result of the tests. Each category's directory contains a directory for each test in that category. Each test's directory holds a result.txt, a replay.txt (which messages were sent when, to where, from whom, etc.), and logs from each server.
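To jump straight to the newest run, a small helper like the one below can locate it. This is a minimal sketch, not part of the test suite: latest_run_dir and read_summary are hypothetical names, and it assumes the timestamp fields are zero-padded so that directory names sort chronologically as plain strings.

```python
from pathlib import Path
from typing import Optional


def latest_run_dir(results_root: Path = Path("test_results")) -> Optional[Path]:
    """Return the most recent run directory under results_root, or None if there is none."""
    if not results_root.is_dir():
        return None
    runs = [p for p in results_root.iterdir() if p.is_dir()]
    # Assumption: zero-padded YY_MM_DD_HH:MM:SS names, so string order is chronological order.
    return max(runs, key=lambda p: p.name, default=None)


def read_summary(results_root: Path = Path("test_results")) -> Optional[str]:
    """Return the text of the latest run's summary.txt, or None if it is missing."""
    run = latest_run_dir(results_root)
    if run is None:
        return None
    summary = run / "summary.txt"
    return summary.read_text() if summary.is_file() else None
```

Called from your project root, read_summary() returns the contents of the newest summary.txt; from there, the category and test directories can be opened the same way.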

Command Line Arguments

By default, the image will be built on each invocation of the test script. For people with slow builds, this can be skipped with the --no-build flag.

By default, the script also stops after the first failure. To run all tests, use the --run-all flag (currently broken due to issues with cleanup code).

To filter tests by name, add the name or a substring of the name as the positional argument.
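Since the filter is matched against test names as a substring, a plain substring check shows which tests a given filter would select. This is a sketch assuming a case-sensitive `in` check; filter_tests is a hypothetical helper rather than the suite's actual code, and the test names are made up for illustration.

```python
from typing import Iterable, List


def filter_tests(test_names: Iterable[str], name_filter: str = "") -> List[str]:
    """Return the tests whose names contain name_filter, in their original order."""
    # Assumption: plain, case-sensitive substring match; an empty filter keeps every test.
    return [name for name in test_names if name_filter in name]


# Hypothetical test names following the category_type_# convention.
names = [
    "basic_simple_1",
    "availability_advanced_2",
    "availability_advanced_20",
    "causal_consistency_basic_1",
]

print(filter_tests(names, "availability_advanced_2"))
# ['availability_advanced_2', 'availability_advanced_20']
print(filter_tests(names, "basic"))
# ['basic_simple_1', 'causal_consistency_basic_1']
```

Under this assumption, a filter can select more than intended: a full test name also matches longer names that contain it, and a category name such as basic can match a test type in another category.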

The main script accepts the following command line arguments:

```
usage: python3 -m cse138-assignment-4-test-suite [-h] [--no-build] [--run-all]
                                                 [--num-threads <NUM_THREADS>]
                                                 [--port-offset <PORT_OFFSET>]
                                                 [filter]

positional arguments:
  filter                filter tests by name (optional)

options:
  -h, --help            show this help message and exit
  --no-build            skip building the container image
  --run-all             run all tests instead of stopping at first failure.
                        Note: this is currently broken due to issues with
                        cleanup code
  --num-threads <NUM_THREADS>
                        number of threads to run tests in
  --port-offset <PORT_OFFSET>
                        port offset for each test (default: 1000)
```

Running tests in parallel

The number of threads to run tests in can be set with the --num-threads flag. The default is 1. Setting --num-threads greater than 1 causes all tests to be run, and there is no way to cancel ongoing tests on the first failure.

Note that using too many threads can cause too many Docker networks to be created, which will result in tests failing with the following error message:

```
Error response from daemon: all predefined address pools have been fully subnetted
```

I believe this has to do with Docker's default options giving each network a large subnet, so there are fewer available networks (I think 32 by default). If this happens, you can try changing the default-address-pools setting in the Docker daemon configuration, or just lower the number of threads until the error goes away.
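To see why subnet size matters, the sketch below counts how many networks fit in a set of address pools using Python's ipaddress module. The pool list is an assumption based on Docker's built-in defaults as I understand them (they may differ between Docker versions); the test suite neither reads nor controls these values, so check your own daemon's default-address-pools setting before relying on the numbers. count_networks is a hypothetical helper.

```python
import ipaddress
from typing import Iterable, Tuple

# ASSUMPTION: Docker's built-in local address pools as (base range, prefix length of each network).
# Verify against your own daemon configuration; versions may differ.
ASSUMED_DEFAULT_POOLS = [
    ("172.17.0.0/16", 16),
    ("172.18.0.0/16", 16),
    ("172.19.0.0/16", 16),
    ("172.20.0.0/14", 16),
    ("172.24.0.0/14", 16),
    ("172.28.0.0/14", 16),
    ("192.168.0.0/16", 20),
]


def count_networks(pools: Iterable[Tuple[str, int]]) -> int:
    """Count how many networks of each pool's size fit inside that pool's base range."""
    total = 0
    for base, size in pools:
        network = ipaddress.ip_network(base)
        # A base range of prefix length p holds 2 ** (size - p) subnets of prefix length size.
        total += 2 ** (size - network.prefixlen)
    return total


print(count_networks(ASSUMED_DEFAULT_POOLS))  # 31
print(count_networks([("10.10.0.0/16", 24)]))  # 256
```

Under these assumed defaults, every network takes a /16 or /20, so only a few dozen fit; splitting a single pool into /24 networks yields 256, which is the reasoning behind changing the pool configuration instead of lowering the thread count.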

Community Contributions

I will not be able to do most of the extensive testing on my own, so I encourage all teams to use this as a core testing resource, both by using the test suite and by contributing to it. However, to keep testing correct and orderly, I will use a pull request process in which submissions go through review. I will also require some components so that each test is easy to follow and use; these test requirements are listed below. While this is more tedious than just cranking out a bunch of scuffed tests, I believe it is better because it will be clear what each test is doing and what it is for. (If you really don't want to do the steps, just open a PR with your code; if someone else adds the context, it may be approved.)

Test Requirements

Basics

Tests must use the name format category_type_#, e.g. availability_advanced_2.
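A name can be checked against this convention with a regular expression. This is a minimal sketch: the category prefixes are assumed to be the four categories listed earlier, written with underscores as in the test_results directory names, the type is assumed to be lowercase letters, and is_valid_test_name is a hypothetical helper, not part of the suite.

```python
import re

# ASSUMPTION: category prefixes match the test_results directory names.
CATEGORIES = ("basic", "availability", "eventual_consistency", "causal_consistency")

# category_type_#: a known category, a lowercase type word, and a number.
TEST_NAME_RE = re.compile(
    r"(?P<category>" + "|".join(CATEGORIES) + r")_(?P<type>[a-z]+)_(?P<number>\d+)"
)


def is_valid_test_name(name: str) -> bool:
    """Return True if the whole name follows the category_type_# convention."""
    return TEST_NAME_RE.fullmatch(name) is not None
```

For example, is_valid_test_name("availability_advanced_2") is True, while "availability_2" (no type) is rejected.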

Test Description

Each test requires a short description of its overall purpose. Include in this description any special behaviors, clients, servers, and partitions. It should be brief enough to make clear what the test does in three to four sentences.

Explicit Violation

For non-basic tests, describe the explicit violation you are looking for in the test (i.e., the finite witness). Note: for the liveness property of eventual consistency, the time limit is 10 seconds; after that point, a finite witness of a violation of eventual consistency can be shown. If applicable, point out where you expect this violation to occur and how it violates the described property. Then describe the expected behavior.
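The 10-second limit turns eventual consistency into something a test can check in finite time: poll the replicas until they agree or the deadline passes. The sketch below assumes a hypothetical read_all callable that reads the same key from every replica (after partitions have healed and writes have stopped) and returns the values; wait_for_convergence is likewise a hypothetical name, not the suite's API.

```python
import time
from typing import Callable, Hashable, Sequence

CONVERGENCE_DEADLINE_S = 10.0  # liveness time limit stated in this README


def wait_for_convergence(
    read_all: Callable[[], Sequence[Hashable]],
    deadline_s: float = CONVERGENCE_DEADLINE_S,
    poll_interval_s: float = 0.5,
) -> bool:
    """Poll until every replica returns the same value (True) or the deadline passes (False)."""
    start = time.monotonic()
    while True:
        values = read_all()
        # Converged: all replicas report the same value.
        if len(set(values)) <= 1:
            return True
        # Past the deadline with replicas still disagreeing: a finite witness of a violation.
        if time.monotonic() - start >= deadline_s:
            return False
        time.sleep(poll_interval_s)
```

If it returns False, the disagreeing reads observed at the deadline are the finite witness to point out in the test's README.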

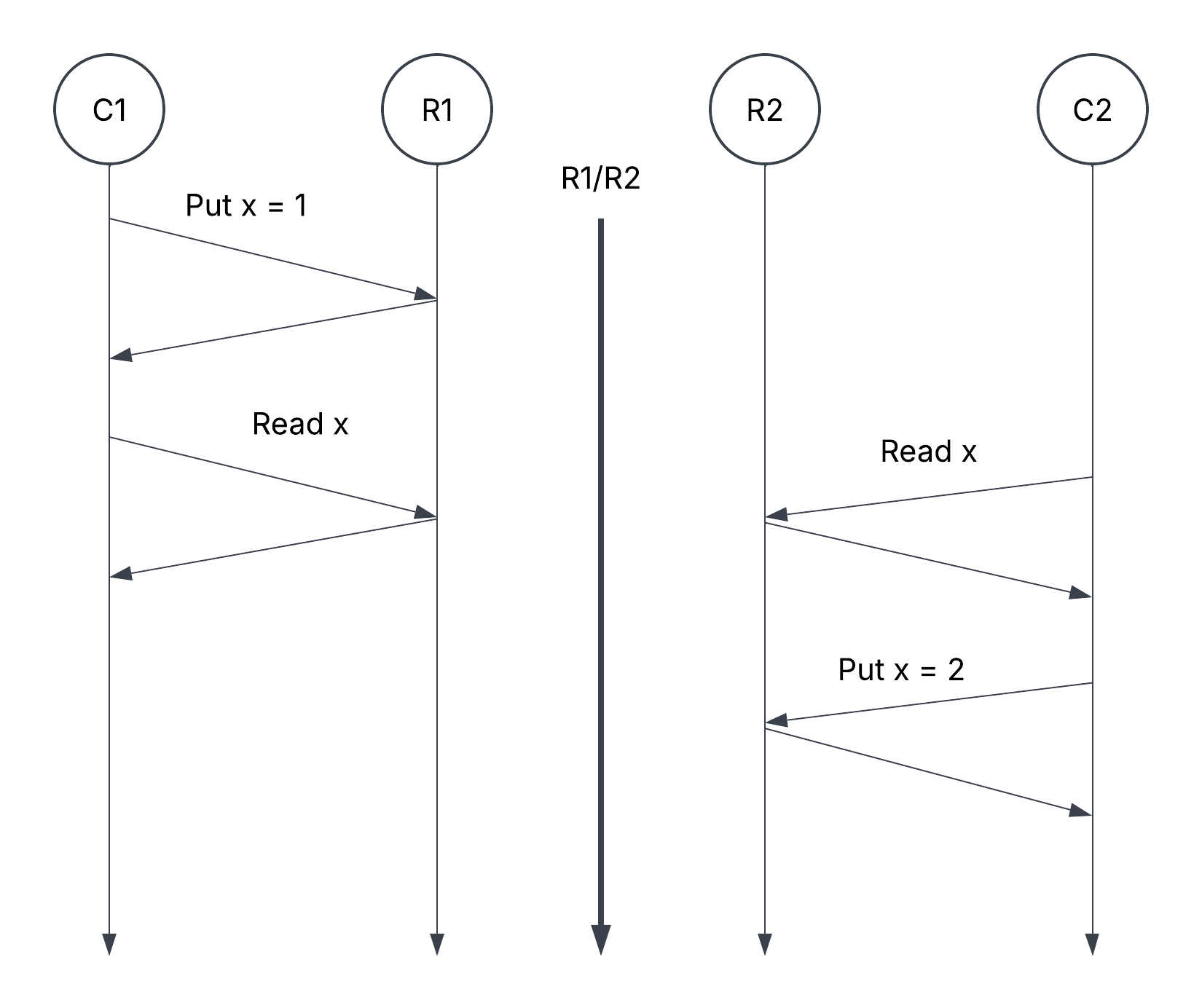

Lamport Diagram

Use a basic Lamport diagram that shows the test. It does not have to mirror the test exactly (e.g., if you have 100 clients, you don't need to show all 100), just a diagram that gets the point across. This, again, can be very basic. Make sure the happens-before relation is modeled accurately. When showing partitions, outline the details of the partition above the partition symbol in the diagram. An example is shown below:

Note: You can use a hand drawing, Google Drawings, or whatever floats your boat as long as it's readable. I used a software product called LucidChart here.


Code Comments and Assertions

Assertions are critical for showing what behavior the test expects. Make sure they check only the described properties, not behavior tailored to your specific implementation. Code comments are not required but should be used to help guide readers through the test (as seen above).
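For example, when two concurrent writes race, which one wins is implementation-specific, so an assertion should accept any value the property allows rather than the value your implementation happens to pick. A minimal sketch, assuming the replicas' reads of one key after the convergence deadline have already been collected into a list; assert_converged_to_allowed is a hypothetical helper.

```python
from typing import Collection, Hashable, Sequence


def assert_converged_to_allowed(reads: Sequence[Hashable], allowed: Collection[Hashable]) -> None:
    """Check the properties only: replicas agree, and on a value some write actually produced."""
    # Property 1: all replicas agree (required once the eventual consistency deadline has passed).
    assert len(set(reads)) == 1, f"replicas disagree: {list(reads)!r}"
    # Property 2: the agreed value is one of the concurrent writes; which one wins is
    # implementation-specific, so the test must not pin a particular winner.
    assert reads[0] in allowed, f"converged to {reads[0]!r}, expected one of {sorted(allowed, key=repr)!r}"
```

A test that wrote x=a and x=b on opposite sides of a partition would call assert_converged_to_allowed(reads, {"a", "b"}), which passes for any implementation that converges on either write.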

Pull Request

Tests

In order to make the collaborative environment work, a pull request system will be used. First, create one or more tests and add the appropriate documents and adjustments for each test directory:

- README.md (with the Test Description, Explicit Violation, and Lamport Diagram as an image)

- test_name.py (with the test itself)

- the test added to its category

Prefix the PR title with the header "ADD TEST(s)". Example: Example Merge Request
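Before opening the PR, the required files can be checked with a short script. This sketch assumes each test directory is named after its test and that the test file is the test's name followed by .py (e.g. availability_advanced_2/availability_advanced_2.py); both are assumptions, and missing_test_files is a hypothetical helper.

```python
from pathlib import Path
from typing import List


def missing_test_files(test_dir: Path) -> List[str]:
    """List the required files missing from a test directory (an empty list means complete)."""
    # ASSUMPTION: the test script is named after its directory, e.g.
    # availability_advanced_2/availability_advanced_2.py.
    required = ["README.md", f"{test_dir.name}.py"]
    return [name for name in required if not (test_dir / name).is_file()]
```

This only checks that the files exist; the README's contents and the test's registration in its category still need a manual look.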

Other changes

If you want to suggest a change other than a test, please create a PR (or open an issue beforehand if you aren't sure it'll be an improvement) that describes what was wrong (or could be improved), why your suggested fix will work better, and any effect it will have on existing tests.